Background

2024 marks the first year in which large models were deployed at scale on edge and endpoint devices. This year, leading smartphone manufacturers began embedding local large models of under 3B (billion) parameters in their flagship devices, demonstrating that such models are commercially viable.

The broader IoT field has a larger installed base and a wider range of applications, but its existing host devices face challenges in becoming intelligent systems. These hosts include NAS units, NVRs, industrial PCs, edge computing gateways, and SBCs (Single Board Computers) such as the Raspberry Pi 5 and Intel N100-based mini PCs. With only limited CPU/GPU resources, they struggle to run large models efficiently for multimodal inference, such as function calling or semantic understanding of visual data.

As a result, mainstream large models and more powerful multimodal models cannot be deployed on these hosts, and any attempt to run them strains the host's limited compute and bandwidth, leading to unstable services and a poor end-user experience.

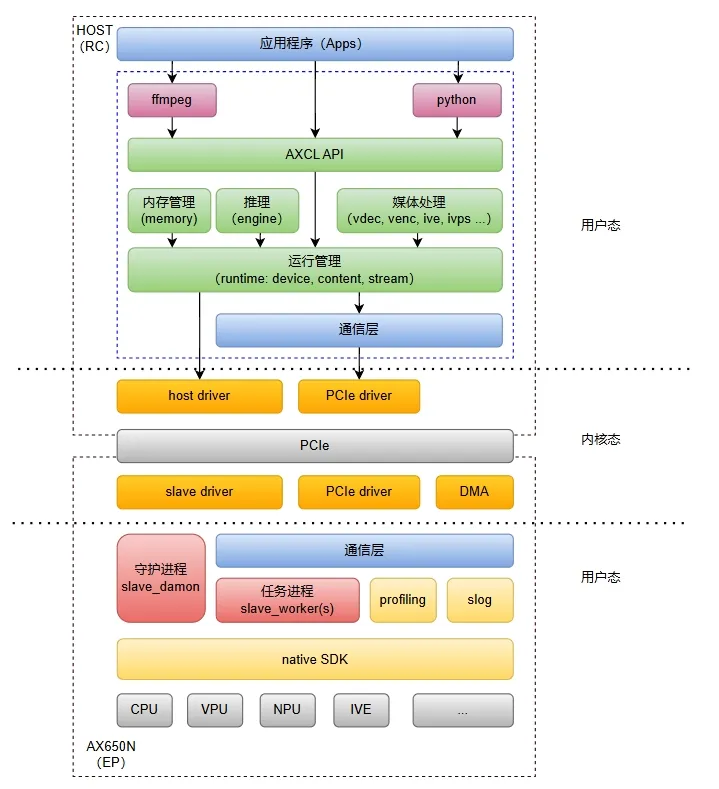

To address these pain points in industry-specific intelligent upgrades, we worked with hardware ecosystem partners to introduce an M.2 intelligent inference card based on the AX650N. The card is intended to give community developers a more flexible and easier way to add edge AI compute, and to encourage experimentation with deploying advanced algorithms and large models on a variety of platforms. It is a step toward making AI more accessible and inclusive.

Target Audience

The M.2 intelligent inference card aims to address the following issues for key market segments:

SBC Smart Upgrades

Single Board Computers (SBCs) such as the Raspberry Pi and similar domestic alternatives primarily use ARM-based CPUs. Their limited compute power is not a flaw in itself, since SBCs are not designed specifically for AI workloads, but there are scenarios where AI capabilities are desired.

Most SBCs provide an M.2 2280 slot for expansion, so developers can add AI capability by plugging in an M.2 intelligent inference card. Raspberry Pi released an M.2 module based on Israel's Hailo chip earlier this year, but current Hailo-based modules have limitations: they do not run Transformer models efficiently, they lack video stream encoding and decoding, and they cannot meet the demands of mainstream models such as Llama3 or Qwen2.5.

NVR/NAS Smart Upgrades

In home NVR and NAS products, M.2 2280 slots are typically reserved for storage expansion. For users who prioritize data privacy and prefer local analysis over cloud-based services, the M.2 intelligent inference card offers an effective way to run AI analysis directly on the local device.

Industrial PC (IPC) Upgrades

In industrial applications, existing IPCs need additional compute to deploy state-of-the-art vision or multimodal models that deliver higher accuracy. For instance, Transformer-based models such as DepthAnything or SAM (Segment Anything Model) can improve the accuracy of video processing. Adding the M.2 intelligent inference card eases the limitations of CPU-bound computing in these scenarios.

Robotics Applications

In robotics, offloading visual perception tasks to the card, especially those based on Vision Transformer models, reduces the computational load on the main control platform. This improves overall system stability and reliability.

Exploration of AIPC Capabilities

The M.2 intelligent inference card also makes it possible to explore what an AI PC (AIPC) realistically requires, as a counterpoint to inflated claims such as the 40T compute requirement promoted by certain industry players.